CMB data analysis

Information about the Universe has to be derived from data sets collected by cosmological observatories. Nearly three decades now observations of the cosmic microwave background (CMB) have been the primary source in this respect. The detection, by an American satellite, COBE in 1991, and precise characterization of the statistical properties of the total intensity anisotropies culminated by measurements of the satellite mission, NASA’s WMAP and ESA’s Planck, have not only confirmed in great detail the overall standard cosmological model of the Universe, but determined its basic parameters with high accuracy, propelling the transition of cosmology into high precision science area it is today.

CMB data analysis is a complex and challenging process as the cosmologically relevant signal is very small as compared to statistical uncertainties and needs to be disentangled from other signals of non-cosmological origins often larger by many orders of magnitude. As our science goals are becoming more subtle, they call for increasingly better precision, constantly driving the volumes of the CMB data sets. We expect that within the duration of this project new data sets will reach many hundreds of TeraBytes reaching or exceeding PetaByte soon after.

A typical modern CMB experiment scans the sky with a telescope registering a measurements up to many hundreds of times per second as shown by the Planck satellite animation below.

It does so with many thousands of detectors over period of many years. Every measurement consists of the sought-after cosmological signal, potentially distorted by the instrument itself, combined with contributions of non-cosmological origins and instrumental noise. The goal of CMB data analysis is to recover the former signal by suppressing or removing the effects of the latter and consequently to characterize statistical properties of the recovered cosmological part.

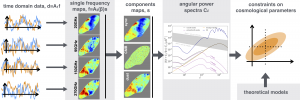

This is achieved by a sequence, often implicit, of different procedures, starting from a map-making, when the raw data as registered by the instrument, are turned into estimates of the sky signals, combining all signals of potentially different origin, and called usually maps. In the process all unwanted temporal effects are removed and noise averaged out, capitalizing on the redundancy of the initial data set.

The maps of the combined sky signal are then separated into maps of components of a different origin. This operation is referred to as a component separation.

One of the components produced by the component separation is the sought after pristine CMB signal, which we subsequently study statistically, estimating, for instance, its power spectrum or non-Gaussianity.

Given the power spectra, we can then test for different cosmological models, model selection, or estimate cosmological parameters, parameter estimation.

The flow chart of the CMB data analysis is shown below:

The advantages of this data analysis pipeline is that the data objects produced on each subsequent step are smaller and thus easier to manipulate. Indeed, we have typically many billions of measurements in the raw (aka time ordered) data set, millions of pixels in each produced map, thousands of coefficients of the power spectra and a handful (~6) of cosmological parameters. This unfortunately is typically offset by the fact that the statistical description of these objects becomes progressively more complex. Indeed, the statistical characterization of the noise is the simplest on the raw data level but the the raw data volume is huge, already daunting, and quickly growing. A potential way around is to perform as many of the data analysis steps in one go as only possible, thus treating the statistical properties of the intermediate data objects implicitly rather than explicitly. This could be particularly powerful if could be done starting from the raw data set. Unavoidably, this can be only done if one is able to manipulate the raw data set in a efficient manner. This can be only achieved by employing the processing power of some of the largest massively parallel supercomputers and on capitalizing on appropriate high performance algorithms as well as their efficient implementations, and statistical methods.

The B3DCMB project proposes to develop effective, comprehensive solutions to challenges posed by such a program by performing multidisciplinary research combining elements of statistics, high performance scientific computing in the cosmological context.